The Future is Now: How AI at the Edge is Revolutionizing Industrial IoT


Time to read 6 min


This guide explores the transformative power of AI at the edge.

We explain what edge AI is and how dedicated hardware such as NPUs (Neural Processing Units) makes IoT machine learning possible on local devices.

Discover powerful edge AI use cases, from predictive maintenance using anomaly detection to real-time computer vision. This is your introduction to the next frontier of industrial automation.

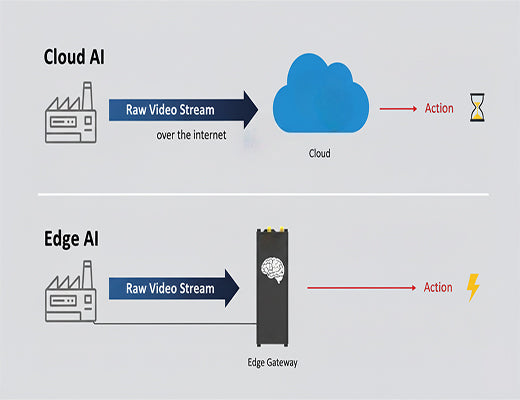

For years, Artificial Intelligence was something that happened in a massive, distant data center. It was a slow, expensive conversation. You'd send your data on a long journey to the cloud and, minutes or even hours later, get a useful answer back. But in the industrial world, a minute is an eternity. A minute can be a thousand faulty products off the line or a critical machine failure. The cloud AI model was simply too slow for real-world action.

That era is over. Welcome to the world of AI at the edge, where intelligence is no longer remote but immediate. This isn't science fiction; it's the new reality of industrial IoT, where devices can see, understand, and react to the world around them in real time.

Edge AI is the practice of running artificial intelligence algorithms and machine learning models directly on a local hardware device, at the "edge" of the network close to the data source. Instead of just collecting data and sending it to the cloud for analysis, the edge device performs the analysis itself, a process known as inference.

The fundamental change is this:

Old Model (Cloud AI): "Here is a 10-minute video file of my conveyor belt. Please analyze it and tell me if you see anything wrong."

New Model (Edge AI): The edge device asks itself, "Am I seeing a defect right now?" a thousand times per second, and only reports back, "Yes, at timestamp 10:32:04, a faulty product was detected."
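To make the contrast concrete, here is a minimal Python sketch of the "new model" loop. It assumes a hypothetical `Frame` type whose `defect_score` stands in for the output of a real vision model, and a hypothetical `detect_locally` helper. Every frame is evaluated on the device, and only detections produce a message for upstream systems.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Callable, Iterable, List


@dataclass
class Frame:
    """Hypothetical camera frame; defect_score stands in for a real model's output."""
    timestamp: datetime
    defect_score: float


def detect_locally(frames: Iterable[Frame], is_defect: Callable[[Frame], bool]) -> List[str]:
    """Evaluate every frame on the device and return only the reports worth sending upstream."""
    reports = []
    for frame in frames:
        if is_defect(frame):  # local inference: no network round trip per frame
            reports.append(f"Defect detected at {frame.timestamp:%H:%M:%S}")
    return reports


# Two seconds of frames at 1,000 frames per second; only one of them shows a defect.
start = datetime(2024, 1, 1, 10, 32, 3)
frames = [
    Frame(start + timedelta(milliseconds=i), 0.95 if i == 1000 else 0.1)
    for i in range(2000)
]
reports = detect_locally(frames, lambda f: f.defect_score > 0.9)
print(reports)  # ['Defect detected at 10:32:04']
```

Here 2,000 local inferences result in a single upstream message, which is the bandwidth and latency advantage the edge model relies on.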

This shift from passive data transport to active, local analysis is the core of the edge AI revolution.

Running complex AI models requires a special kind of processing power. While a standard CPU can do the job, it's often too slow and power-hungry to be practical for a compact industrial device. This is where specialized hardware comes in.

The real breakthrough for AI at the edge is the integration of the NPU (Neural Processing Unit) into industrial gateways. An NPU is a specialized microprocessor designed for one job: executing the mathematical operations required by AI models with extreme speed and efficiency. For example, the Robustel EG5120's integrated NPU provides 2.3 TOPS (trillion operations per second) of dedicated AI processing power, allowing it to run complex models without bogging down the main CPU.
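A TOPS rating is a peak figure, so it is most useful as an upper bound. The sketch below turns it into a rough ceiling on inferences per second for a compute-bound model. The 5-billion-operation model and the 30% utilization are hypothetical placeholders, not EG5120 measurements; real throughput also depends on memory bandwidth, which operators the NPU supports, and how the vendor counts operations.

```python
def max_inferences_per_second(npu_tops: float, ops_per_inference: float,
                              utilization: float = 1.0) -> float:
    """Upper bound on inference rate for a compute-bound model.

    npu_tops: rated peak, in trillions of operations per second.
    ops_per_inference: operations one forward pass needs (hypothetical here).
    utilization: fraction of the peak actually achieved, in (0, 1].
    """
    if not 0.0 < utilization <= 1.0:
        raise ValueError("utilization must be in (0, 1]")
    if ops_per_inference <= 0:
        raise ValueError("ops_per_inference must be positive")
    return npu_tops * 1e12 * utilization / ops_per_inference


# Hypothetical model needing 5 billion operations per inference on a 2.3 TOPS NPU.
peak = max_inferences_per_second(2.3, 5e9)                        # about 460 per second
realistic = max_inferences_per_second(2.3, 5e9, utilization=0.3)  # about 138 per second
print(f"peak ~{peak:.0f}/s, at 30% utilization ~{realistic:.0f}/s")
```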

Hardware is only half the story. The software ecosystem has also matured. Frameworks like TensorFlow Lite allow developers to take large, powerful AI models trained in the cloud and optimize them to run efficiently on resource-constrained edge devices. This, combined with technologies like Docker, makes deploying an IoT machine learning model on a device like the EG5120 easier than ever before.
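One of the main optimizations behind running cloud-trained models on edge hardware is 8-bit quantization. TensorFlow Lite's integer scheme maps each real value to an int8 through the affine relation `real_value = (int8_value - zero_point) * scale`, which stores each value in a quarter of the space of float32. In practice the TensorFlow Lite converter computes these parameters for you; the pure-Python sketch below, with hypothetical helper names, only illustrates the arithmetic for a single tensor and checks that the round-trip error stays within half a quantization step.

```python
from typing import List, Sequence, Tuple

QMIN, QMAX = -128, 127  # signed 8-bit integer range


def choose_qparams(rmin: float, rmax: float) -> Tuple[float, int]:
    """Pick an affine scale and zero point mapping [rmin, rmax] onto [QMIN, QMAX]."""
    # Widen the range to include 0.0 so that zero is exactly representable.
    rmin, rmax = min(rmin, 0.0), max(rmax, 0.0)
    scale = (rmax - rmin) / (QMAX - QMIN) or 1.0  # fall back to 1.0 for an all-zero tensor
    zero_point = max(QMIN, min(QMAX, round(QMIN - rmin / scale)))
    return scale, zero_point


def quantize(values: Sequence[float], scale: float, zero_point: int) -> List[int]:
    """real -> int8: q = round(r / scale) + zero_point, clamped to the int8 range."""
    return [max(QMIN, min(QMAX, round(v / scale) + zero_point)) for v in values]


def dequantize(qvalues: Sequence[int], scale: float, zero_point: int) -> List[float]:
    """int8 -> real: r = (q - zero_point) * scale."""
    return [(q - zero_point) * scale for q in qvalues]


weights = [-1.0, -0.5, 0.0, 0.25, 1.0]
scale, zero_point = choose_qparams(min(weights), max(weights))
q = quantize(weights, scale, zero_point)
restored = dequantize(q, scale, zero_point)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, f"max error {max_error:.4f} (half a step is {scale / 2:.4f})")
```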


This isn't just theory. Here are three powerful edge AI use cases that are delivering real value right now.

The Challenge: A factory wants to prevent costly, unplanned downtime on its critical machinery.

How Edge AI Helps: An edge gateway is connected to vibration and acoustic sensors on a machine. It runs an anomaly detection model locally that has been trained to understand the machine's normal operating sounds and vibrations.

The Result: The moment the model detects a subtle abnormal pattern that a human would likely miss, it triggers a maintenance alert. The system catches developing faults before they become failures, turning unplanned downtime into scheduled maintenance.
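A production system would typically use a trained model (for example, an autoencoder over vibration spectra), but the core idea of learning "normal" and flagging deviations can be shown with a deliberately simple stand-in: learn the mean and spread of the vibration RMS level from healthy data, then flag windows whose z-score exceeds a threshold. The class name, the 4-sigma threshold, and the synthetic sine-wave data are assumptions for illustration only.

```python
import math
from statistics import mean, stdev
from typing import List, Sequence


def rms(window: Sequence[float]) -> float:
    """Root-mean-square amplitude of one window of vibration samples."""
    return math.sqrt(sum(x * x for x in window) / len(window))


class RmsAnomalyDetector:
    """Learns the normal RMS level from healthy windows, then flags windows whose
    RMS lies more than `threshold` standard deviations from that baseline."""

    def __init__(self, threshold: float = 4.0) -> None:
        self.threshold = threshold
        self.mu = 0.0
        self.sigma = 1.0

    def fit(self, normal_windows: List[Sequence[float]]) -> None:
        levels = [rms(w) for w in normal_windows]
        self.mu = mean(levels)
        self.sigma = stdev(levels) or 1e-9  # avoid dividing by zero if training data is constant

    def is_anomalous(self, window: Sequence[float]) -> bool:
        z = abs(rms(window) - self.mu) / self.sigma
        return z > self.threshold


def sine_window(amplitude: float, n: int = 100, cycles: int = 5) -> List[float]:
    """Synthetic vibration signal standing in for real sensor data."""
    return [amplitude * math.sin(2 * math.pi * cycles * i / n) for i in range(n)]


# Train on healthy windows whose amplitude drifts slightly (1.00 to 1.04).
detector = RmsAnomalyDetector()
detector.fit([sine_window(1.0 + 0.01 * (k % 5)) for k in range(50)])

print(detector.is_anomalous(sine_window(1.02)))  # False: within normal variation
print(detector.is_anomalous(sine_window(1.5)))   # True: vibration level far above baseline
```

Because both training and scoring run on the gateway, raw vibration data never has to leave the site; only the alert does.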

The Challenge: A bottling plant needs to verify that every bottle cap is sealed correctly, at a rate of dozens of bottles per second.

How Edge AI Helps: A high-speed camera is connected to an edge device with a powerful NPU. The device runs a computer vision model that instantly analyzes each image of a bottle cap.

The Result: If a cap is misaligned or defective, the edge device signals an actuator that kicks the faulty bottle off the line within milliseconds. This response window is too short to allow a round trip to the cloud, which makes this a natural use case for edge AI.
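Why milliseconds matter here can be sketched with a little timing arithmetic: the camera and the reject actuator sit some distance apart, so the decision must arrive before the bottle reaches the actuator. The `RejectStation` class, the 0.25 m spacing, the 1.25 m/s belt speed, and both latency figures below are hypothetical values chosen for illustration, not measurements.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class RejectStation:
    """Hypothetical reject station: a camera, then a kicker further down the belt."""
    camera_to_kicker_m: float  # distance from the camera to the reject actuator
    belt_speed_m_s: float      # conveyor speed

    def travel_time_ms(self) -> float:
        """Time for a bottle to move from the camera to the kicker."""
        return 1000.0 * self.camera_to_kicker_m / self.belt_speed_m_s

    def kick_time_ms(self, capture_ms: float, decision_latency_ms: float) -> Optional[float]:
        """When to fire the kicker for a defective bottle, or None if the
        decision would arrive after the bottle has already passed it."""
        arrival_ms = capture_ms + self.travel_time_ms()
        if capture_ms + decision_latency_ms > arrival_ms:
            return None
        return arrival_ms


station = RejectStation(camera_to_kicker_m=0.25, belt_speed_m_s=1.25)  # 200 ms of travel
print(station.kick_time_ms(capture_ms=0.0, decision_latency_ms=20.0))   # 200.0: fire on time
print(station.kick_time_ms(capture_ms=0.0, decision_latency_ms=250.0))  # None: too late
```

At dozens of bottles per second, several bottles are between the camera and the kicker at any moment, so a real controller would keep a queue of these kick times, one per rejected bottle.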

The Challenge: Modern warehouses rely on fleets of Automated Guided Vehicles (AGVs) to move goods.

How Edge AI Helps: Each AGV is equipped with an edge computer that processes data from its cameras and LiDAR sensors. It runs AI models for object recognition, pathfinding, and collision avoidance.

The Result: The AGV can navigate its environment safely and efficiently without needing constant instructions from a central server. This local decision-making is what makes true autonomous systems possible.
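A common building block of collision avoidance is a stopping-envelope check: the vehicle must stop if the nearest obstacle is closer than the distance it covers during its reaction time plus its braking distance, v²/(2a). The minimal sketch below uses hypothetical function names and parameter values and treats every LiDAR return as lying in the vehicle's path; real AGVs combine certified safety scanners with far richer perception. Any sensing and inference latency adds directly to `reaction_s`, which is one reason this decision is made on board.

```python
import math
from typing import Sequence


def stopping_distance_m(speed_m_s: float, reaction_s: float, decel_m_s2: float) -> float:
    """Distance covered during the reaction time plus braking distance v^2 / (2a)."""
    return speed_m_s * reaction_s + speed_m_s ** 2 / (2 * decel_m_s2)


def must_stop(lidar_ranges_m: Sequence[float], speed_m_s: float,
              reaction_s: float = 0.1, decel_m_s2: float = 1.0,
              margin_m: float = 0.2) -> bool:
    """True if the nearest LiDAR return lies inside the stopping envelope plus a margin.

    Simplification: every return is treated as being in the vehicle's path.
    Default parameter values are hypothetical.
    """
    nearest = min(lidar_ranges_m, default=math.inf)  # no returns means nothing detected
    return nearest <= stopping_distance_m(speed_m_s, reaction_s, decel_m_s2) + margin_m


# At 1.0 m/s: 0.1 m of reaction travel + 0.5 m of braking + 0.2 m margin = 0.8 m envelope.
print(must_stop([2.5, 0.7, 3.0], speed_m_s=1.0))  # True: obstacle at 0.7 m
print(must_stop([2.5, 1.5], speed_m_s=1.0))       # False: nearest obstacle at 1.5 m
```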

Why should you, as a business leader, care about running IoT machine learning models locally? Here are the key business benefits:

Unprecedented Speed and Real-Time Response: For applications that involve safety, automation, or quality control, the very low latency of edge AI is not just nice to have; it's a core requirement.

Enhanced Security for Proprietary Models and Data: Your AI models and the operational data they analyze are incredibly valuable intellectual property. Processing them locally on a secure edge device minimizes their exposure to external networks.

Massive Cost Reduction on Cloud AI Services: Cloud-based AI inference services can be very expensive, especially at scale. Performing inference at the edge significantly reduces your reliance on these services, leading to a much lower Total Cost of Ownership (TCO).


Edge AI is the practice of running AI and machine learning models directly on a local hardware device (at the "edge" of the network), rather than in a centralized cloud. This allows for real-time analysis and decision-making without internet latency.

An NPU (Neural Processing Unit) is a specialized processor optimized for the types of calculations used in AI models. It works alongside the main CPU, accelerating AI tasks like data inference so they can be performed much faster and more efficiently than on a general-purpose processor alone.

The main benefits are speed (real-time results with low latency), cost savings (reduced data transmission to the cloud), reliability (can operate without an internet connection), and enhanced security (sensitive data and AI models remain on-premise).