Edge Computing vs. Cloud Computing: Which is Right for Your IoT Data?

Time to read 5 min

The debate over edge vs. cloud computing for IoT data is not about choosing a winner, but about assigning the right task to the right location.

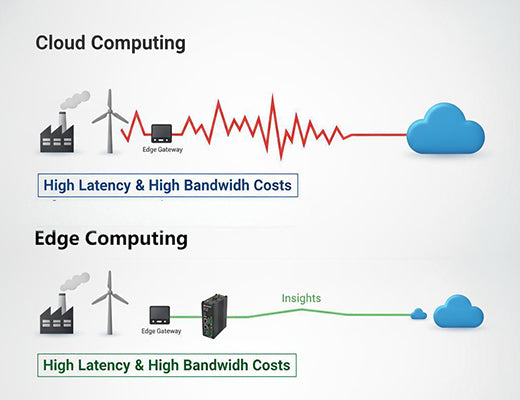

Cloud computing offers immense, centralized processing power, ideal for big data analytics. Edge computing provides decentralized, real-time processing right at the data source, perfect for low-latency control and immediate insights.

For most industrial applications, the optimal solution is not one or the other, but a powerful hybrid model that leverages the unique strengths of both.

Imagine you're an author writing a book. You have two options for your research. You could use a massive, central library located across the country (the cloud). It has every book imaginable and unlimited space, but it takes time to travel there and get the information you need. Or, you could use the powerful computer and reference books on your local desk (the edge). It's incredibly fast and always available, but has limited storage.

Which one do you choose? The smart answer, of course, is both. You use your local desk for the immediate, day-to-day writing and quick lookups, and the central library for deep, heavy-duty research.

This is the perfect analogy for the edge vs. cloud computing debate in IoT. Let's be clear: this isn't just a philosophical question. It's a critical architectural decision that will have a massive impact on your system's performance, cost, and reliability.

The traditional cloud computing model for IoT is straightforward. All the data generated by your sensors, PLCs, and devices—every temperature reading, every vibration measurement, every camera frame—is collected and sent over the internet to a centralized server in a data center (e.g., hosted on Amazon Web Services, Microsoft Azure, or Google Cloud).
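To see why sending every raw reading gets expensive, here is some back-of-the-envelope arithmetic for a single site. The sensor count, sample rate, and payload size are hypothetical assumptions, not figures from any real deployment. Protocol overhead (TLS, MQTT/HTTP headers, retries) is ignored, so real traffic would be higher.

```python
# Hypothetical single-site parameters: illustrative assumptions, not measurements.
SENSORS = 200            # number of sensors streaming to the cloud
SAMPLE_HZ = 10           # readings per second, per sensor
BYTES_PER_READING = 100  # serialized payload incl. sensor ID and timestamp (assumed)

SECONDS_PER_DAY = 24 * 60 * 60


def raw_bytes_per_day(sensors: int, sample_hz: float, bytes_per_reading: int) -> float:
    """Bytes uploaded per day when every raw reading is sent to the cloud."""
    return sensors * sample_hz * bytes_per_reading * SECONDS_PER_DAY


daily = raw_bytes_per_day(SENSORS, SAMPLE_HZ, BYTES_PER_READING)
print(f"{daily / 1e9:.2f} GB/day, {daily * 30 / 1e9:.1f} GB per 30-day month")
# 17.28 GB/day, 518.4 GB per 30-day month
```

On a metered cellular link, a volume like this is what drives the "Bandwidth Cost" row in the comparison below.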

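The Pros: near-infinite, centralized processing power for big data analytics, practically unlimited long-term storage, and a single place to manage an entire fleet of devices.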

Edge computing flips the model on its head. Instead of sending data far away, it brings the computation to the data. Processing is handled locally, on a powerful device called an IoT Edge Gateway, right on the factory floor or at the remote site.

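The Pros: ultra-low (millisecond) latency for real-time control, lower bandwidth costs because only insights rather than raw data are sent, and the ability to keep operating autonomously when the internet connection drops.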


| Feature | Cloud Computing (Centralized) | Edge Computing (Decentralized) |
|---|---|---|
| Latency | High (100s of ms to seconds) | Ultra-low (milliseconds) |
| Bandwidth cost | High (all raw data is sent) | Low (only insights are sent) |
| Offline reliability | None (requires constant internet) | High (can operate autonomously) |
| Scalability | Near-infinite processing power | Limited by local hardware |
| Best for | Big data analytics, long-term storage, fleet management | Real-time control, data filtering, immediate alerts, AI inference |
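The "only insights are sent" idea in the bandwidth row can be sketched as a small local reduction step: the gateway collapses a window of raw readings into one summary record and makes the alert decision on the spot. This is a minimal sketch; `Summary`, `summarize_window`, the threshold, and the sample readings are hypothetical, and the actual upload to the cloud is omitted.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Optional, Sequence


@dataclass
class Summary:
    """Compact record an edge gateway might forward instead of raw data."""
    count: int
    minimum: float
    maximum: float
    average: float
    alert: bool  # True if any reading in the window crossed the alarm threshold


def summarize_window(readings: Sequence[float], alarm_threshold: float) -> Optional[Summary]:
    """Reduce one window of raw readings to a single summary (runs locally)."""
    if not readings:
        return None
    peak = max(readings)
    return Summary(
        count=len(readings),
        minimum=min(readings),
        maximum=peak,
        average=mean(readings),
        alert=peak > alarm_threshold,  # decided locally, no cloud round trip
    )


# Hypothetical one-second window of vibration readings (mm/s).
window = [2.1, 2.3, 2.2, 7.9, 2.4]
print(summarize_window(window, alarm_threshold=6.0))
```

Five readings become one record here; with the fast sample rates typical of vibration monitoring, the reduction factor is far larger, while the alert still fires immediately on site.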

So, who wins the edge vs. cloud computing battle? The real 'aha!' moment is realizing it's not a battle at all. It's a partnership.

The most powerful, efficient, and scalable IoT architecture is a hybrid model that uses both the edge and the cloud for what they do best.

This hybrid model is enabled by a new class of powerful edge hardware. An industrial edge gateway, for example, can bring cloud-native technologies such as Docker containers and advanced analytics right to the factory floor.

The question is not "Should I choose edge or cloud computing for my IoT project?" The right question is "Which of my data processing workloads belong on the edge, and which belong in the cloud?"
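That question can be turned into a rough, first-pass decision rule based on the comparison table. The sketch below is illustrative only: the `Workload` fields, the `place` function, and the 200 ms cloud round-trip figure are assumptions, and you would measure your own network and requirements instead.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    name: str
    latency_budget_ms: float  # how quickly a result is needed
    must_run_offline: bool    # must keep working if the uplink drops
    needs_fleet_data: bool    # needs data from many sites or long history


# Assumed typical round trip to the cloud; replace with a measured value.
CLOUD_ROUND_TRIP_MS = 200.0


def place(w: Workload) -> str:
    """Heuristic placement following the comparison table (a sketch, not a rule)."""
    if w.must_run_offline or w.latency_budget_ms < CLOUD_ROUND_TRIP_MS:
        return "edge"    # real-time control, immediate alerts
    if w.needs_fleet_data:
        return "cloud"   # big data analytics, fleet management
    return "either"      # no hard constraint; decide on cost


for w in (
    Workload("emergency stop interlock", 10, True, False),
    Workload("fleet-wide efficiency dashboard", 60_000, False, True),
    Workload("daily shift report", 3_600_000, False, False),
):
    print(f"{w.name}: {place(w)}")
```

A workload that needs both fleet-wide data and a fast local response usually gets split: the heavy, fleet-wide part runs in the cloud and the time-critical part runs on the edge.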

By adopting a hybrid architecture, you can build a solution that is simultaneously fast and powerful, resilient and scalable, secure and cost-effective. You get the immediate responsiveness of a local system with the immense analytical power of the cloud. And that is the true foundation of a successful modern IIoT deployment.

An edge gateway can also run with no cloud connection at all: it can be configured to operate a completely autonomous local control and monitoring system. However, it would then lose the benefits of the cloud, such as centralized remote management, fleet-wide over-the-air (OTA) updates, and large-scale historical data analysis.
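A common middle ground is store-and-forward: local control keeps running during an outage, and data bound for the cloud is buffered and delivered once connectivity returns. The class below is a minimal sketch under that assumption; `StoreAndForward` and the `send` callback are hypothetical stand-ins for whatever uplink a real gateway uses.

```python
from collections import deque
from typing import Callable, Deque, List


class StoreAndForward:
    """Buffers outgoing records while offline and flushes them when the link returns."""

    def __init__(self, send: Callable[[dict], bool], max_records: int = 10_000):
        self._send = send  # returns True if the record was delivered
        # Bounded buffer: once full, the oldest records are discarded first.
        self._buffer: Deque[dict] = deque(maxlen=max_records)

    def publish(self, record: dict) -> None:
        self._buffer.append(record)
        self.flush()

    def flush(self) -> int:
        """Send buffered records in order, stopping at the first failure."""
        sent = 0
        while self._buffer:
            if not self._send(self._buffer[0]):
                break  # still offline: keep the record for the next attempt
            self._buffer.popleft()
            sent += 1
        return sent

    def pending(self) -> int:
        return len(self._buffer)


# Usage with a fake uplink that is down, then comes back.
online = False
delivered: List[dict] = []


def fake_send(record: dict) -> bool:
    if online:
        delivered.append(record)
    return online


gw = StoreAndForward(fake_send, max_records=3)
for i in range(5):
    gw.publish({"seq": i})
print(gw.pending())                   # 3 (the two oldest were dropped)
online = True
gw.flush()
print([r["seq"] for r in delivered])  # [2, 3, 4]
```

The buffer size is a design choice: a bounded buffer protects the gateway's limited storage at the cost of losing the oldest data during a very long outage.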

Edge and cloud computing also have different cost structures. Edge computing requires an upfront capital expense (CapEx) for the gateway hardware. However, by processing data locally, it can dramatically reduce the long-term operational expenses (OpEx) of cellular data transfer and cloud storage. For many industrial applications, this can result in a significantly lower Total Cost of Ownership (TCO).
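The CapEx-versus-OpEx trade-off can be sanity-checked with simple payback arithmetic. All figures below are hypothetical, and the sketch deliberately ignores maintenance, hardware lifetime, and financing, which a real TCO analysis would include.

```python
import math


def breakeven_months(gateway_capex: float,
                     monthly_opex_cloud_only: float,
                     monthly_opex_hybrid: float) -> float:
    """Months until the gateway's upfront cost is repaid by lower running costs."""
    monthly_saving = monthly_opex_cloud_only - monthly_opex_hybrid
    if monthly_saving <= 0:
        return math.inf  # the gateway never pays for itself on OpEx alone
    return gateway_capex / monthly_saving


# Hypothetical figures for one site (any currency).
print(breakeven_months(gateway_capex=1200,
                       monthly_opex_cloud_only=250,
                       monthly_opex_hybrid=50))
# 6.0
```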

AI fits naturally into the hybrid model. The typical workflow is to use the massive processing power of the cloud to train a complex AI model on a huge dataset. A smaller, optimized version of that model is then deployed to the edge gateway, which uses its local processing hardware (such as an NPU) to run real-time inference (making predictions) on live data.
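The train-in-the-cloud, infer-at-the-edge split can be illustrated with a deliberately trivial model. In this sketch, a z-score anomaly detector stands in for a real AI model: the "cloud" side fits its parameters on historical data and exports them as JSON, and the "edge" side loads them and scores live readings. The functions, JSON layout, 3.0 limit, and sample data are hypothetical; a production pipeline would instead export a compressed neural network and run it on the gateway's NPU through a vendor runtime, which is not shown.

```python
import json
from statistics import mean, pstdev
from typing import Sequence


# --- Cloud side: "train" on historical data (stand-in for real model training).
def train(history: Sequence[float]) -> str:
    """Fit a trivial z-score model and export its parameters as JSON."""
    return json.dumps({"mean": mean(history), "std": pstdev(history), "z_limit": 3.0})


# --- Edge side: load the exported parameters and run inference on live data.
class EdgeAnomalyModel:
    def __init__(self, exported: str):
        params = json.loads(exported)
        self.mean = params["mean"]
        self.std = params["std"] or 1e-9  # avoid division by zero on flat data
        self.z_limit = params["z_limit"]

    def predict(self, reading: float) -> bool:
        """Return True if the reading looks anomalous."""
        return abs(reading - self.mean) / self.std > self.z_limit


history = [20.0, 20.5, 19.5, 20.2, 19.8, 20.1, 19.9, 20.0]  # hypothetical temps (°C)
model = EdgeAnomalyModel(train(history))
print([model.predict(x) for x in (20.3, 25.0)])  # [False, True]
```

Only the small parameter file crosses the network; every prediction happens locally, so it keeps working even when the uplink is down.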