A Guide to TinyML and Edge AI for Vision Applications in 2025

Time to read 5 min

In the world of industrial automation and smart cities, TinyML and Edge AI for vision applications are transforming how we interact with the physical world. From real-time quality control on a production line to traffic monitoring on a busy street, the ability to perform computer vision locally is a game-changer.

This guide is for engineers and developers looking to understand this powerful technology.

We'll break down the differences between TinyML and Edge AI, explore their real-world applications, and explain why a purpose-built industrial gateway with a dedicated Neural Processing Unit (NPU), like the Robustel EG5120, is the essential hardware for turning your vision-based AI concepts into reality.

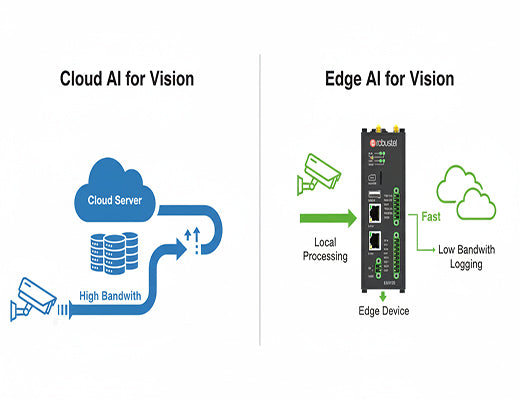

I've spoken with countless operations managers who have a clear goal: they want to "see" what's happening in their remote operations. They want to automatically detect defects on a conveyor belt, count the number of people entering a secured area, or read license plates in a parking facility. For years, the only option was to stream massive amounts of video data to the cloud for analysis. This approach was slow, expensive, and often unreliable.

But what if the device on-site could not only see, but understand what it was seeing in real-time? This is the revolutionary power of TinyML and Edge AI for vision. By deploying AI models directly on hardware at the edge, we can perform complex image recognition and object detection with millisecond-level latency. Let's be clear: this technology is moving from the lab to the factory floor, and choosing the right hardware is the key to a successful deployment. This is a core application for any modern Industrial IoT Edge Gateway.

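While often used together, TinyML and Edge AI refer to two different scales of edge intelligence. TinyML generally means running very small models on microcontroller-class devices with tight memory and power budgets, while Edge AI covers larger models running on more capable hardware such as gateways and embedded Linux systems.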

For any serious industrial machine vision application—like analyzing a high-resolution video stream—you are firmly in the world of Edge AI. You need a device with the processing power to handle the workload.

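To run Edge AI for computer vision in real time, a general-purpose CPU alone is usually not enough. You need a device with a Neural Processing Unit (NPU), a specialized processor designed to accelerate the mathematical operations used in AI models, chiefly the large matrix multiplications and convolutions at the heart of neural networks.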

The Robustel EG5120 is purpose-built for these tasks.

Let's make this tangible. Imagine a bottling plant where you need to ensure every bottle has a cap.
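As a rough sketch of how such a check could be structured on the gateway: a model scores each frame locally, and only the resulting decision needs to leave the device. Everything named here is hypothetical. `fake_model` stands in for a real NPU-accelerated model, the 0.5 threshold is arbitrary, and the frames are simulated as small dictionaries rather than camera images.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List


@dataclass
class Inspection:
    bottle_id: int
    cap_score: float  # model confidence that a cap is present, 0.0 to 1.0
    reject: bool      # True when the bottle should be diverted


def inspect_bottles(
    frames: Iterable[dict],
    score_frame: Callable[[dict], float],
    threshold: float = 0.5,
) -> List[Inspection]:
    """Score each frame locally and flag bottles whose cap score is below threshold."""
    results = []
    for bottle_id, frame in enumerate(frames):
        score = score_frame(frame)
        results.append(Inspection(bottle_id, score, reject=score < threshold))
    return results


def fake_model(frame: dict) -> float:
    """Hypothetical stand-in for a real model: returns a precomputed score."""
    return frame["cap_score"]


# Three simulated bottles; the second one is missing its cap.
simulated_frames = [{"cap_score": 0.97}, {"cap_score": 0.12}, {"cap_score": 0.88}]
results = inspect_bottles(simulated_frames, fake_model)
rejected_ids = [r.bottle_id for r in results if r.reject]
print(rejected_ids)
```

In a real deployment the scoring function would wrap the camera capture and the model call, and the reject flag would drive whatever actuator or alert the line uses.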

This entire process is a perfect example of Edge AI for vision: it's fast, reliable, and incredibly data-efficient.
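To put a number on "data-efficient", here is a back-of-the-envelope comparison between streaming video to the cloud and sending only inspection events. The figures are illustrative assumptions, not measurements: a 4 Mbit/s camera stream, 500 events per day, and 200 bytes per event.

```python
def daily_megabytes_streaming(bitrate_mbps: float, hours: float = 24.0) -> float:
    """Data volume in MB for continuously streaming video at a given bitrate."""
    seconds = hours * 3600
    return bitrate_mbps * seconds / 8  # megabits -> megabytes


def daily_megabytes_events(events_per_day: int, bytes_per_event: int) -> float:
    """Data volume in MB when only small event messages are sent upstream."""
    return events_per_day * bytes_per_event / 1_000_000


# Assumed values for illustration only.
stream_mb = daily_megabytes_streaming(bitrate_mbps=4.0)
event_mb = daily_megabytes_events(events_per_day=500, bytes_per_event=200)

print(f"Streaming: {stream_mb:.0f} MB/day")
print(f"Events only: {event_mb:.1f} MB/day")
```

With these assumed inputs, a single continuously streamed camera produces roughly 43 GB per day, while the event-only approach stays around a tenth of a megabyte.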

The world of TinyML and Edge AI for vision is unlocking unprecedented capabilities for industrial automation and smart infrastructure. While TinyML is perfect for simple, low-power tasks, true industrial machine vision requires the power of Edge AI. A successful deployment depends on choosing hardware that is not only powerful but also rugged, secure, and easy to manage. By leveraging an edge gateway with an NPU like the Robustel EG5120, you get a production-ready platform that provides the processing power, software flexibility, and industrial reliability needed to transform your computer vision projects from a proof-of-concept into a scalable, real-world solution.

A1: TOPS stands for "Trillions of Operations Per Second." It's a measure of the peak processing power of an AI accelerator, usually quoted for low-precision (for example, 8-bit integer) operations. A higher TOPS number generally means the device can run more complex AI models or process data (like video frames) at a faster rate.
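A quick way to relate a TOPS figure to video throughput is to divide the operations available per second by the operations a model needs per frame. The sketch below assumes a hypothetical 2.0 TOPS accelerator, a model costing 5 billion operations per frame, and 25% sustained utilization. None of these are EG5120 specifications, and real-world throughput is typically well below the theoretical peak because of memory bandwidth and unsupported operators.

```python
def max_frames_per_second(
    tops: float, gops_per_frame: float, utilization: float = 1.0
) -> float:
    """Upper-bound frame rate from accelerator throughput and model cost.

    tops:            peak accelerator throughput, in trillions of ops per second
    gops_per_frame:  model cost, in billions of ops per inference
    utilization:     fraction of peak actually sustained (0.0 to 1.0)
    """
    ops_per_second = tops * 1e12 * utilization
    ops_per_frame = gops_per_frame * 1e9
    return ops_per_second / ops_per_frame


# Illustrative numbers only.
fps = max_frames_per_second(tops=2.0, gops_per_frame=5.0, utilization=0.25)
print(f"Estimated ceiling: {fps:.0f} frames per second")
```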

A2: Not necessarily. While model training requires data science skills, deploying a pre-trained model (e.g., from TensorFlow Hub) on the EG5120 is a more straightforward process for a developer familiar with Linux and Docker. The NXP eIQ™ toolkit also provides tools to simplify the process of converting and optimizing models for the NPU.
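One optimization step that commonly comes up when preparing a model for an NPU is quantizing it to 8-bit integers. The sketch below assumes the affine scheme used by TensorFlow Lite, where `real = scale * (q - zero_point)`. It is a plain-Python illustration of the arithmetic, not the eIQ toolkit's API, and the `scale` and `zero_point` values are made up for the example.

```python
from typing import List


def quantize(values: List[float], scale: float, zero_point: int) -> List[int]:
    """Map real values to int8 using q = round(v / scale) + zero_point, clamped."""
    quantized = []
    for v in values:
        q = round(v / scale) + zero_point
        quantized.append(max(-128, min(127, q)))  # clamp to the int8 range
    return quantized


def dequantize(q_values: List[int], scale: float, zero_point: int) -> List[float]:
    """Recover approximate real values using real = scale * (q - zero_point)."""
    return [scale * (q - zero_point) for q in q_values]


# Hypothetical quantization parameters for illustration.
scale, zero_point = 0.5, -10

quantized = quantize([0.0, 1.0, -3.0, 100.0], scale, zero_point)
restored = dequantize(quantized, scale, zero_point)

print(quantized)
print(restored)
```

The last input value falls outside the representable range, so it saturates at 127 and comes back smaller than it went in. That clipping is one reason quantized models are normally validated against the original before deployment.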

A3: Yes. The EG5120's Gigabit Ethernet ports allow it to connect directly to any standard IP camera. For cameras with a serial interface, its RS232/RS485 ports can be used. This flexibility makes it an ideal edge gateway with an NPU for retrofitting intelligence into existing camera systems.